Do you know how to secure your Storage Accounts? How about the differences between SAS tokens and Access Keys? Some of you do, but many of you don’t, so let me introduce the differences.

This is a series of two posts. This first one covers Storage accounts and their services, and the second one will cover the security features.


Different Storage Account types

The Azure Storage platform includes the following data services:

Azure Blobs: A massively scalable object store for text and binary data. Also includes support for big data analytics through Data Lake Storage Gen2.

Blob storage is ideal for:

- Serving images or documents directly to a browser.

- Storing files for distributed access.

- Streaming video and audio.

- Storing data for backup and restore, disaster recovery, and archiving.

- Storing data for analysis by an on-premises or Azure-hosted service.

Objects in Blob storage can be accessed from anywhere in the world via HTTP or HTTPS. Users or client applications can access blobs via URLs, the Azure Storage REST API, Azure PowerShell, Azure CLI, or an Azure Storage client library.
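To make the URL access concrete, here is a minimal sketch of how a blob URL is put together. It assumes the global Azure cloud endpoint suffix `blob.core.windows.net`; the account, container, and blob names are made up for illustration.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the HTTPS URL of a blob (global Azure cloud assumed)."""
    # Blob names can contain "/" to mimic folders, so leave slashes unescaped
    # and percent-encode everything else that is not URL-safe.
    encoded_name = quote(blob_name, safe="/")
    return f"https://{account}.blob.core.windows.net/{container}/{encoded_name}"


# Hypothetical names, used only for illustration.
print(blob_url("mystorageaccount", "images", "2023/team photo.png"))
```

Whether that URL works without credentials depends on the access settings of the account and container; a private blob still needs a SAS token or another form of authorization.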

Azure Files: Managed file shares for cloud or on-premises deployments.

One thing that distinguishes Azure Files from files on a corporate file share is that you can access the files from anywhere in the world using a URL that points to the file and includes a shared access signature (SAS) token. You can generate SAS tokens; they allow specific access to a private asset for a specific amount of time.
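As a rough sketch of what such a URL looks like, the helper below appends a SAS token to the address of a file in a share. The endpoint suffix `file.core.windows.net` is the global Azure cloud default; the account, share, path, and token values are placeholders, not real credentials.

```python
from urllib.parse import quote


def file_sas_url(account: str, share: str, path: str, sas_token: str) -> str:
    """Combine a file's address with a SAS token into one shareable URL."""
    # Some tools hand out the token with a leading "?", some without.
    token = sas_token.lstrip("?")
    encoded_path = quote(path, safe="/")
    return f"https://{account}.file.core.windows.net/{share}/{encoded_path}?{token}"


# Placeholder token: a real one carries a signature generated by Azure.
example_token = "?sv=2021-06-08&sp=r&se=2023-01-31T18:00:00Z&sig=PLACEHOLDER"
print(file_sas_url("mystorageaccount", "tools", "configs/app settings.json", example_token))
```

Anyone who has the full URL can use it until the token expires, so treat it like a password.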

File shares can be used for many common scenarios:

- Many on-premises applications use file shares. This feature makes it easier to migrate those applications that share data to Azure. If you mount the file share to the same drive letter that the on-premises application uses, the part of your application that accesses the file share should work with minimal, if any, changes.

- Configuration files can be stored on a file share and accessed from multiple VMs. Tools and utilities used by multiple developers in a group can be stored on a file share, ensuring that everybody can find them, and that they use the same version.

- Resource logs, metrics, and crash dumps are just three examples of data that can be written to a file share and processed or analyzed later.

Azure Queues: A messaging store for reliable messaging between application components.

The Azure Queue service is used to store and retrieve messages. Queue messages can be up to 64 KB in size, and a queue can contain millions of messages. Queues are generally used to store lists of messages to be processed asynchronously.
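Because of the 64 KB limit, it can be worth checking a message before sending it. The sketch below only measures the payload; it does not talk to Azure. The function names are my own, and the Base64 option is there because some client configurations Base64-encode messages, which inflates the size by roughly a third.

```python
import base64

MAX_QUEUE_MESSAGE_BYTES = 64 * 1024  # the 64 KB limit mentioned above


def queue_payload_size(message: str, base64_encode: bool = False) -> int:
    """Return the number of bytes the message would occupy on the wire."""
    data = message.encode("utf-8")
    if base64_encode:
        data = base64.b64encode(data)
    return len(data)


def fits_in_queue(message: str, base64_encode: bool = False) -> bool:
    """True if the (optionally Base64-encoded) message is within the limit."""
    return queue_payload_size(message, base64_encode) <= MAX_QUEUE_MESSAGE_BYTES


print(fits_in_queue("a" * 50_000))                      # plain text, under the limit
print(fits_in_queue("a" * 50_000, base64_encode=True))  # same text once Base64-encoded
```

A common way around the limit is to store the large payload as a blob and put only a reference to that blob in the queue message.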

Azure Tables: A NoSQL store for schemaless storage of structured data.

Azure Table storage is now part of Azure Cosmos DB. For the documentation, see the Azure Table Storage Overview. In addition to the existing Azure Table storage service, there is a new Azure Cosmos DB Table API offering that provides throughput-optimized tables, global distribution, and automatic secondary indexes.

Azure Disks: Block-level storage volumes for Azure VMs.

An Azure managed disk is a virtual hard disk (VHD). You can think of it like a physical disk in an on-premises server but virtualized. Azure-managed disks are stored as page blobs, which are a random IO storage object in Azure. We call a managed disk ‘managed’ because it is an abstraction over page blobs, blob containers, and Azure storage accounts. With managed disks, all you have to do is provision the disk, and Azure takes care of the rest.

And each service is accessed through a storage account.

What about Storage Accounts?

Storage accounts come in several flavors.

| Type of storage account | Supported storage services | Redundancy options | Usage |
|---|---|---|---|
| Standard general-purpose v2 | Blob (including Data Lake Storage¹), Queue, and Table storage, Azure Files | LRS, GRS, RA-GRS, ZRS, GZRS, RA-GZRS² | Standard storage account type for blobs, file shares, queues, and tables. Recommended for most scenarios using Azure Storage. Note that if you want support for NFS file shares in Azure Files, use the premium file shares account type. |
| Premium block blobs³ | Blob storage (including Data Lake Storage¹) | LRS, ZRS² | Premium storage account type for block blobs and append blobs. Recommended for scenarios with high transaction rates, or scenarios that use smaller objects or require consistently low storage latency. |
| Premium file shares³ | Azure Files | LRS, ZRS² | Premium storage account type for file shares only. Recommended for enterprise or high-performance scale applications. Use this account type if you want a storage account that supports both SMB and NFS file shares. |
| Premium page blobs³ | Page blobs only | LRS | Premium storage account type for page blobs only. |

¹ Data Lake Storage is a set of capabilities dedicated to big data analytics, built on Azure Blob storage. For more information, see Introduction to Data Lake Storage Gen2 and Create a storage account to use with Data Lake Storage Gen2.

² Zone-redundant storage (ZRS) and geo-zone-redundant storage (GZRS/RA-GZRS) are available only for standard general-purpose v2, premium block blobs, and premium file shares accounts in certain regions. For more information, see Azure Storage redundancy.

³ Premium performance storage accounts use solid-state drives (SSDs) for low latency and high throughput.

You cannot change a storage account to a different type after it is created. To move your data to a storage account of a different type, you must create a new account and copy the data to the new account.

Shared access signatures (SAS)

A storage account has two access keys, and an account SAS is signed with one of them. There are two keys for usability reasons: if you need to rotate one key, the other one still works and your service doesn’t get downtime.

A shared access signature (SAS) is a URI that grants restricted access rights to Azure Storage resources. You can provide a shared access signature to clients who should not be trusted with your storage account key but to whom you wish to delegate access to certain storage account resources. By distributing a shared access signature URI to these clients, you grant them access to a resource for a specified period of time. An account-level SAS can delegate access to multiple storage services (that is, blob, file, queue, and table). Note that stored access policies are currently not supported for an account-level SAS.

You have the following options when generating the connection string. You must allow at least one of the three resource types (service, container, object) before you can generate it.

When you click Generate, you get a signed string based on the settings you chose, plus a SAS URL for each service type.

Let’s have a closer look at the File service SAS URL. Here you can see the IP address and the start and end times that were set when the string was generated.

You can use the generated connection string to connect, for example, with Azure Storage Explorer.

And choose either one.

Paste in the connection string that was generated.

Review that the settings are correct, and hit Connect.

And you can see the services under the connection we just made.
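The settings visible in the SAS URL are ordinary query parameters, so you can inspect them yourself. Below is a small sketch using only the Python standard library. The parameter names (`sv`, `ss`, `srt`, `sp`, `st`, `se`, `sip`, `spr`, `sig`) are the ones an account SAS uses; the URL itself is a made-up example with a placeholder signature, and the timestamp format is assumed to be the `YYYY-MM-DDTHH:MM:SSZ` form.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit

SAS_FIELDS = {
    "sv": "storage service version",
    "ss": "services (b=blob, f=file, q=queue, t=table)",
    "srt": "resource types (s=service, c=container, o=object)",
    "sp": "permissions",
    "st": "start time (UTC)",
    "se": "expiry time (UTC)",
    "sip": "allowed IP address or range",
    "spr": "allowed protocols",
    "sig": "signature",
}


def describe_sas(url: str) -> dict:
    """Map the SAS query parameters of a URL to readable descriptions."""
    query = parse_qs(urlsplit(url).query)
    described = {SAS_FIELDS[k]: v[0] for k, v in query.items() if k in SAS_FIELDS}
    if "signature" in described:
        described["signature"] = "<hidden>"  # never print the signature itself
    return described


def is_expired(url: str, now: datetime) -> bool:
    """True if the SAS expiry time (se) is at or before 'now'."""
    expiry_text = parse_qs(urlsplit(url).query)["se"][0]
    expiry = datetime.strptime(expiry_text, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return now >= expiry


# Made-up example URL; the signature is a placeholder.
sas_url = (
    "https://mystorageaccount.file.core.windows.net/"
    "?sv=2021-06-08&ss=f&srt=sco&sp=rl"
    "&st=2023-01-31T10:00:00Z&se=2023-01-31T18:00:00Z"
    "&sip=203.0.113.10&spr=https&sig=PLACEHOLDER"
)
for label, value in describe_sas(sas_url).items():
    print(f"{label}: {value}")
print("expired:", is_expired(sas_url, datetime.now(timezone.utc)))
```

This only reads the token. It cannot change it: editing any parameter invalidates the signature, which is what makes the restrictions enforceable.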

Access Keys

Access keys authenticate your applications’ requests to this storage account. Keep your keys in a secure location like Azure Key Vault, and replace them often with new keys. The two keys allow you to replace one while still using the other.

You have two keys.

And again you can connect to the Storage Account with the key and name of the account, like this.

Verify settings and connect.

Now you can see the connection we just made in the Explorer.
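Outside Storage Explorer, the account name and key are usually handed to applications as a single connection string. The sketch below shows the common key-based layout of that string and how to take it apart again. The helper names and the key value are placeholders of mine, and `core.windows.net` is assumed as the endpoint suffix.

```python
def build_connection_string(account_name: str, account_key: str,
                            endpoint_suffix: str = "core.windows.net") -> str:
    """Assemble a key-based storage connection string."""
    return (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
        f"EndpointSuffix={endpoint_suffix}"
    )


def parse_connection_string(connection_string: str) -> dict:
    """Split a connection string into its name/value pairs."""
    # Split on the first "=" only: account keys are Base64 and may end in "=".
    return dict(part.split("=", 1) for part in connection_string.split(";") if part)


# Placeholder key, not a real credential.
conn = build_connection_string("mystorageaccount", "ZmFrZS1rZXk=")
print(parse_connection_string(conn)["AccountName"])
```

Since the string contains the full account key, it gives unrestricted access to the account. Store it in something like Azure Key Vault rather than in source code.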

Shared Access Tokens

A shared access signature (SAS) is a URI that grants restricted access to an Azure Storage container. Use it when you want to grant access to storage account resources for a specific time range without sharing your storage account key.

You can find the shared access tokens under each corresponding service, except Azure Files. For Azure Files there is a preview feature that lets you add Azure AD users directly, and yes, without Azure AD Domain Services.

Generating the token works the same way as with the connection string, so I won’t go through it again.

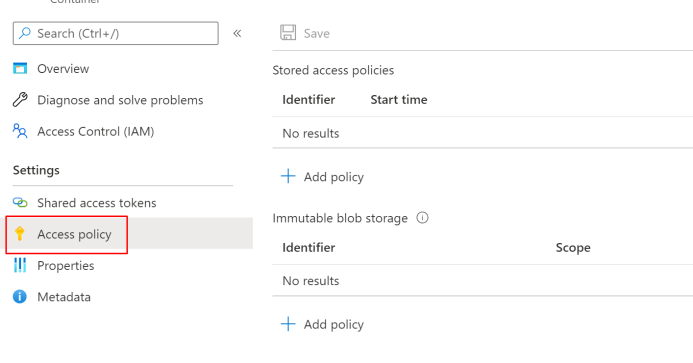

Access policy

You can also allow access to specific services with Access policies.

When you click Add policy, you get almost the same options as with shared access tokens.

You can add permissions and a time limit for the policy.

Lifecycle management

With the lifecycle management policy, you can:

- Transition blobs from cool to hot immediately when they are accessed, to optimize for performance.

- Transition blobs, blob versions, and blob snapshots to a cooler storage tier if these objects have not been accessed or modified for a period of time, to optimize for cost. In this scenario, the lifecycle management policy can move objects from hot to cool, from hot to archive, or from cool to archive.

- Delete blobs, blob versions, and blob snapshots at the end of their lifecycles.

- Define rules to be run once per day at the storage account level.

- Apply rules to containers or to a subset of blobs, using name prefixes or blob index tags as filters.
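Lifecycle rules are defined as a JSON policy document. The sketch below builds one such rule in Python and prints the JSON you could paste into the policy's code view. The rule name, prefix, and day counts are example values of mine; the structure (`rules`, `definition`, `filters`, `actions`) follows the documented policy schema as I understand it, so check it against the current documentation before relying on it.

```python
import json


def lifecycle_rule(name: str, prefix: str, cool_after: int,
                   archive_after: int, delete_after: int) -> dict:
    """Build one rule that cools, archives, and finally deletes block blobs."""
    if not cool_after < archive_after < delete_after:
        raise ValueError("expected cool_after < archive_after < delete_after")
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            # The prefix starts with the container name, e.g. "logs/app".
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after},
                    "delete": {"daysAfterModificationGreaterThan": delete_after},
                },
                "snapshot": {
                    "delete": {"daysAfterCreationGreaterThan": delete_after},
                },
            },
        },
    }


# Example values only: cool after 30 days, archive after 90, delete after 365.
policy = {"rules": [lifecycle_rule("archive-old-logs", "logs/app", 30, 90, 365)]}
print(json.dumps(policy, indent=2))
```

Keeping the rule in code like this makes it easy to review the thresholds and to reuse the same policy across several storage accounts.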

Geo-replication

You can enable geo-replication in the storage account’s configuration.

And now you can see two different data centers listed under geo-replication.
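With geo-replication in place, the second data center gets its own endpoint, named after the account with a `-secondary` suffix. The sketch below derives both blob endpoints. Note the assumptions: the function name is mine, the suffix is the global Azure cloud default, and the secondary endpoint is only readable when the account uses a read-access option (RA-GRS or RA-GZRS).

```python
def blob_endpoints(account: str, endpoint_suffix: str = "core.windows.net") -> dict:
    """Return the primary and secondary blob endpoints of a storage account."""
    return {
        "primary": f"https://{account}.blob.{endpoint_suffix}",
        # Readable only with RA-GRS or RA-GZRS redundancy.
        "secondary": f"https://{account}-secondary.blob.{endpoint_suffix}",
    }


for role, url in blob_endpoints("mystorageaccount").items():
    print(f"{role}: {url}")
```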

Closure

In my example we connected with Azure Storage Explorer, but you can also connect directly from your application or from other services.

Microsoft offers a wide variety of services inside Storage Accounts, and in the next part I will show the security measures you must take to protect these publicly available services.