
Why?

As we all know, identity has been a hot topic since attackers started using MFA fatigue and other methods to discover and attack our resources, whether as external or internal entities. An often overlooked aspect of security, however, is the data layer.

You could leak data and not even know it. Some things you should consider: how do you transfer the data, how do you encrypt it at rest, and who has access to it?

We will discover a bit about how Data Factory works and what you should consider security-wise.

What can you do with it?

Azure Data Factory is a cloud-based data integration service that allows you to create, schedule, and orchestrate data pipelines to move and transform data between various data stores and data sources. With Data Factory, you can:

- Extract data from various sources: Data Factory supports a wide range of data sources, including on-premises and cloud-based data stores, such as Azure SQL Database, Azure Blob Storage, and Azure Cosmos DB. You can use Data Factory to extract data from these sources using connectors and data movement activities.

- Transform and enrich data: Data Factory provides a variety of data transformation activities that you can use to clean, normalize, and enrich your data. You can use Data Factory to apply transformations such as aggregations, pivots, and joins to your data.

- Load data into destination stores: Data Factory allows you to load transformed data into a variety of destination stores, including Azure SQL Database, Azure Data Lake Storage, and Azure Cosmos DB.

- Schedule and automate data pipelines: Data Factory allows you to schedule and automate your data pipelines using triggers and orchestration activities. You can use Data Factory to run pipelines on a schedule, in response to an event, or on demand.

- Monitor and manage data pipelines: Data Factory provides a range of tools for monitoring and managing your data pipelines, including Azure Monitor, Azure Log Analytics, and Azure Resource Manager templates.

Overall, Azure Data Factory provides a powerful platform for integrating and transforming data from various sources and destinations, and automating data pipelines for efficient data management.

To better understand what needs to be secured, here is an excellent example of an enterprise Power BI solution from the Azure Architecture Center.

How to secure it?

Here is a list of steps I compiled. The list is not complete, but it gives a good starting point for many of the use cases organizations could have. Just make sure the steps fit your own deployments, and add any others you need on top of these.

Azure Policy

First of all, apply Azure policies to your environment. Here are some examples that I exported for easier reading.

| policyName | policyDescription | policyId |
| --- | --- | --- |
| Azure Data Factory should use private link | Azure Private Link lets you connect your virtual network to Azure services without a public IP address at the source or destination. The Private Link platform handles the connectivity between the consumer and services over the Azure backbone network. By mapping private endpoints to Azure Data Factory, data leakage risks are reduced. Learn more about private links at: https://docs.microsoft.com/azure/data-factory/data-factory-private-link. | 8b0323be-cc25-4b61-935d-002c3798c6ea |
| Public network access on Azure Data Factory should be disabled | Disabling the public network access property improves security by ensuring your Azure Data Factory can only be accessed from a private endpoint. | 1cf164be-6819-4a50-b8fa-4bcaa4f98fb6 |
| Configure Data Factories to disable public network access | Disable public network access for your Data Factory so that it is not accessible over the public internet. This can reduce data leakage risks. Learn more at: https://docs.microsoft.com/azure/data-factory/data-factory-private-link. | 08b1442b-7789-4130-8506-4f99a97226a7 |
| Azure data factories should be encrypted with a customer-managed key | Use customer-managed keys to manage the encryption at rest of your Azure Data Factory. By default, customer data is encrypted with service-managed keys, but customer-managed keys are commonly required to meet regulatory compliance standards. Customer-managed keys enable the data to be encrypted with an Azure Key Vault key created and owned by you. You have full control and responsibility for the key lifecycle, including rotation and management. Learn more at https://aka.ms/adf-cmk. | 4ec52d6d-beb7-40c4-9a9e-fe753254690e |
| [Preview]: Azure Data Factory integration runtime should have a limit for number of cores | To manage your resources and costs, limit the number of cores for an integration runtime. | 85bb39b5-2f66-49f8-9306-77da3ac5130f |
| [Preview]: Azure Data Factory linked services should use system-assigned managed identity authentication when it is supported | Using system-assigned managed identity when communicating with data stores via linked services avoids the use of less secured credentials such as passwords or connection strings. | f78ccdb4-7bf4-4106-8647-270491d2978a |
| Configure private endpoints for Data factories | Private endpoints connect your virtual network to Azure services without a public IP address at the source or destination. By mapping private endpoints to your Azure Data Factory, you can reduce data leakage risks. Learn more at: https://docs.microsoft.com/azure/data-factory/data-factory-private-link. | 496ca26b-f669-4322-a1ad-06b7b5e41882 |
| [Preview]: Azure Data Factory should use a Git repository for source control | Enable source control on data factories, to gain capabilities such as change tracking, collaboration, continuous integration, and deployment. | 77d40665-3120-4348-b539-3192ec808307 |
| [Preview]: Azure Data Factory linked services should use Key Vault for storing secrets | To ensure secrets (such as connection strings) are managed securely, require users to provide secrets using an Azure Key Vault instead of specifying them inline in linked services. | 127ef6d7-242f-43b3-9eef-947faf1725d0 |
| SQL Server Integration Services integration runtimes on Azure Data Factory should be joined to a virtual network | Azure Virtual Network deployment provides enhanced security and isolation for your SQL Server Integration Services integration runtimes on Azure Data Factory, as well as subnets, access control policies, and other features to further restrict access. | 0088bc63-6dee-4a9c-9d29-91cfdc848952 |
| Configure private DNS zones for private endpoints that connect to Azure Data Factory | Private DNS records allow private connections to private endpoints. Private endpoint connections allow secure communication by enabling private connectivity to your Azure Data Factory without a need for public IP addresses at the source or destination. For more information on private endpoints and DNS zones in Azure Data Factory, see https://docs.microsoft.com/azure/data-factory/data-factory-private-link. | 86cd96e1-1745-420d-94d4-d3f2fe415aa4 |
| [Preview]: Azure Data Factory linked service resource type should be in allow list | Define the allow list of Azure Data Factory linked service types. Restricting allowed resource types enables control over the boundary of data movement. For example, restrict a scope to only allow blob storage with Data Lake Storage Gen1 and Gen2 for analytics or a scope to only allow SQL and Kusto access for real-time queries. | 6809a3d0-d354-42fb-b955-783d207c62a8 |

To see more, try AzPolicyAdvertizer. It is an excellent site for finding which policies you should use based on your services.
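
As a rough sketch of how the built-in definitions in the table can be referenced, the Python snippet below builds policy assignment request bodies from three of the policy IDs listed. The helper name `build_assignment` and the choice of display names are illustrative assumptions; actually sending the body to Azure (through the REST API, CLI or an SDK) and choosing the assignment scope are left out.

```python
import json

# Built-in policy definition IDs copied from the table in this post.
ADF_POLICIES = {
    "Azure Data Factory should use private link":
        "8b0323be-cc25-4b61-935d-002c3798c6ea",
    "Public network access on Azure Data Factory should be disabled":
        "1cf164be-6819-4a50-b8fa-4bcaa4f98fb6",
    "Azure data factories should be encrypted with a customer-managed key":
        "4ec52d6d-beb7-40c4-9a9e-fe753254690e",
}


def build_assignment(policy_name: str, policy_guid: str) -> dict:
    """Return a policy assignment body for one built-in definition.

    Hypothetical helper: it only shapes the JSON, it does not call Azure.
    """
    return {
        "properties": {
            "displayName": policy_name,
            # Built-in definitions are referenced through this provider path.
            "policyDefinitionId": (
                "/providers/Microsoft.Authorization/policyDefinitions/"
                + policy_guid
            ),
        }
    }


assignments = [build_assignment(n, g) for n, g in ADF_POLICIES.items()]
print(json.dumps(assignments[0], indent=2))
```

Keeping the IDs in one dictionary makes it easy to review which policies an environment is expected to have before anything is assigned.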

Network

Use a dedicated ExpressRoute connection together with an IPsec tunnel to encrypt traffic in transit.

Identity

Keep unwanted users disabled so that they cannot sign in.

Use RBAC for access management

AAD allows you to control access to your Data Factory resources using Azure role-based access control (RBAC). You can also use AAD to enable multi-factor authentication (MFA) for added security.

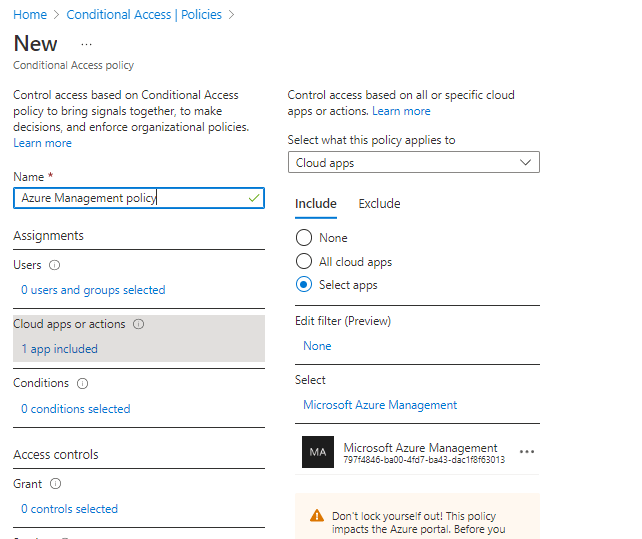

For example, through Conditional Access policies you could require a trusted location and a trusted device to manage Data Factory.

See more information here.

Store credentials in Key vault

Keep your credentials in Key Vault for safekeeping. For example, for an on-premises SQL Server you can specify the name of the Azure Key Vault secret that stores the target SQL Server linked service’s connection string (e.g. Data Source=<servername>\<instance name if using named instance>;Initial Catalog=<databasename>;Integrated Security=False;User ID=<username>;Password=<password>;).
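
The sketch below shows what a Key Vault reference can look like in a linked service definition. Note that it uses a variation of the approach described above: only the password is stored as a Key Vault secret, while the rest of the connection string stays inline. The linked service names, the secret name and the helper `sql_linked_service` are made-up placeholders, so treat this as an illustration of the JSON shape rather than a ready-to-deploy definition.

```python
import json


def sql_linked_service(name: str, conn_str: str,
                       kv_linked_service: str, secret_name: str) -> dict:
    """Build a SQL Server linked service whose password lives in Key Vault.

    Hypothetical helper: it refuses connection strings that still carry
    an inline password.
    """
    if "password=" in conn_str.lower():
        raise ValueError("keep the password out of the connection string")
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                "connectionString": conn_str,
                # Reference to a secret instead of a plain-text password.
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": kv_linked_service,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                },
            },
        },
    }


linked_service = sql_linked_service(
    name="OnPremSqlServer",                      # placeholder name
    conn_str="Data Source=<servername>;Initial Catalog=<databasename>;"
             "Integrated Security=False;User ID=<username>;",
    kv_linked_service="MyKeyVaultLinkedService",  # placeholder name
    secret_name="sql-password",                   # placeholder secret
)
print(json.dumps(linked_service, indent=2))
```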

Infrastructure

Use encryption

Data Factory supports encryption at rest for all data stored in Azure Data Lake Storage Gen2 and Azure Blob Storage. You can also use Azure Key Vault to manage and rotate encryption keys for your data.

Enable network isolation

By default, Data Factory resources are isolated from other resources in your Azure subscription. You can further isolate your Data Factory resources by using virtual networks and subnets.

Use Customer-Managed keys to protect your Data factory

Quick tip! You have to enable the customer-managed key while the Data Factory is still empty, before adding any linked services or pipelines.

Also, copy the whole key identifier path from the key.

See more on Key vault and Managed HSM in my previous posts.

Use resource locks

Use resource locks to prevent accidental deletion or modification of critical Data Factory resources.
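
A minimal sketch of a lock definition, assuming you want to protect a factory against deletion. The helper name `build_lock` and the note text are illustrative; the snippet only builds the request body and does not apply the lock.

```python
import json

# The two lock levels Azure Resource Manager supports.
LOCK_LEVELS = {"CanNotDelete", "ReadOnly"}


def build_lock(level: str, notes: str) -> dict:
    """Return the request body for a management lock (hypothetical helper)."""
    if level not in LOCK_LEVELS:
        raise ValueError(f"unsupported lock level: {level}")
    return {"properties": {"level": level, "notes": notes}}


lock_body = build_lock("CanNotDelete", "Protects the production Data Factory")
print(json.dumps(lock_body, indent=2))
```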

Use Azure Private Link

If you don’t use a completely isolated mode, you can use Azure Private Link to securely access your Data Factory resources over a private network connection, rather than over the public internet.

Inside Data factory

Use system-assigned managed identities to give access to linked resources.

Just make sure that your managed identities don’t have more rights than they need, and that you use dedicated keys for the different components in your infrastructure.

Using Purview

You can attach labels and policies directly to your content.

You can also access an isolated Purview account from Data Factory.

Create Custom roles

Create custom roles for accessing the Data Factory instance. You can find the JSON definitions for these custom roles here.
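
To give an idea of the shape of such a definition, here is a minimal read-only example. It is not one of the roles linked above: the role name, the description, the two actions chosen and the all-zero subscription ID are illustrative assumptions, and you should verify the actions against your own requirements.

```python
import json


def build_reader_role(subscription_id: str) -> dict:
    """Return an example custom role definition (hypothetical role)."""
    return {
        "Name": "Data Factory Pipeline Reader (example)",
        "IsCustom": True,
        "Description": "Read-only access to factories and their pipelines.",
        # Only read operations are granted.
        "Actions": [
            "Microsoft.DataFactory/factories/read",
            "Microsoft.DataFactory/factories/pipelines/read",
        ],
        "NotActions": [],
        "AssignableScopes": [f"/subscriptions/{subscription_id}"],
    }


role = build_reader_role("00000000-0000-0000-0000-000000000000")
print(json.dumps(role, indent=2))
```

Starting from read-only actions and adding write permissions one at a time keeps the role close to least privilege.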

Use data masking

Data masking allows you to obscure sensitive data in your data pipelines by replacing it with fictitious data. This can help protect sensitive information from unauthorized access.
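
The idea can be sketched in plain Python. This is a generic illustration of masking and not a Data Factory API: the function names, the field names and the choice to keep the last four characters visible are all assumptions made for the example.

```python
def mask_value(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Replace all but the last `visible` characters with `mask_char`.

    Values that are not longer than `visible` are masked completely so
    that short values do not leak.
    """
    if visible <= 0 or len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Return a copy of the record with the sensitive fields masked."""
    return {
        key: mask_value(str(val)) if key in sensitive_fields else val
        for key, val in record.items()
    }


row = {"name": "Alice", "card_number": "4111111111111111"}
masked = mask_record(row, {"card_number"})
print(masked)
```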

Hiding activity from logs

An activity’s output could contain private data that would otherwise be displayed in plain text.

For example, a Lookup activity might access a source and return personally identifiable content.

When the Secure input and Secure output options are checked on the activity, its input and output will not be captured in logging.
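
In the pipeline JSON, the Secure input and Secure output checkboxes appear as the `secureInput` and `secureOutput` flags in an activity’s `policy` block. The sketch below switches them on for selected activity types in an exported pipeline definition. The pipeline content and the helper `secure_activities` are made up for illustration.

```python
import copy
import json


def secure_activities(pipeline: dict, activity_types: set) -> dict:
    """Return a copy of the pipeline with secure input/output enabled
    for every activity whose type is in `activity_types`."""
    secured = copy.deepcopy(pipeline)
    for activity in secured["properties"]["activities"]:
        if activity["type"] in activity_types:
            policy = activity.setdefault("policy", {})
            policy["secureInput"] = True
            policy["secureOutput"] = True
    return secured


# Hypothetical pipeline definition with two activities.
pipeline = {
    "name": "CopyCustomerData",
    "properties": {
        "activities": [
            {"name": "LookupCustomers", "type": "Lookup",
             "typeProperties": {}},
            {"name": "WaitABit", "type": "Wait",
             "typeProperties": {"waitTimeInSeconds": 5}},
        ]
    },
}

secured_pipeline = secure_activities(pipeline, {"Lookup"})
print(json.dumps(secured_pipeline, indent=2))
```

Working on a copy keeps the original definition untouched, which makes it easier to diff the two versions before publishing.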

Monitor and audit activity

To keep an eye on and audit activity in your Data Factory environment, use Azure Monitor and Azure Log Analytics. You can set up alerts that warn you of suspicious behavior or potential security risks.
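
As a sketch of the first step, the snippet below builds a diagnostic setting body that sends the pipeline, activity and trigger run logs to a Log Analytics workspace. The workspace resource ID is a placeholder and the helper name is an assumption; creating the setting and defining the alert rules themselves are not shown.

```python
import json

# Run-related log categories of Data Factory used in this example.
ADF_LOG_CATEGORIES = ["PipelineRuns", "ActivityRuns", "TriggerRuns"]


def build_diagnostic_setting(workspace_resource_id: str) -> dict:
    """Return a diagnostic setting body targeting a Log Analytics workspace
    (hypothetical helper, it only shapes the JSON)."""
    return {
        "properties": {
            "workspaceId": workspace_resource_id,
            "logs": [
                {"category": category, "enabled": True}
                for category in ADF_LOG_CATEGORIES
            ],
            "metrics": [{"category": "AllMetrics", "enabled": True}],
        }
    }


setting = build_diagnostic_setting(
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.OperationalInsights/workspaces/<workspace-name>"
)
print(json.dumps(setting, indent=2))
```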

You can also make Log analytics Isolated with

Additional ways to make it more secure

See Azure Security Benchmark for Data factory here.

More on Data movement considerations from Microsoft Learn.

What kind of attack paths are there?

There have been attack paths and there will be more; SynLapse is one example. Microsoft has already fixed it, so no worries, but as data becomes more important to organizations, it also becomes more important to attackers.

Microsoft has addressed this one already

Closure

Organizations concentrate on securing normal users, admins and their workstations. There is a lot more to security than just that.

As we have seen in this write-up, data solutions need to be secured as well. There are always risks, and automated processes don’t always reveal the gaps until it’s too late. Depending on the sector you are building the solution for, there could be monetary fines if you don’t comply with the industry or data protection regulations.

Now it is time to concentrate on family. Thanks to everyone in the community for this year, and see you again next year!

With that in mind, have a very Merry Christmas and keep your loved ones close!
